BluePrint: Text Search

Introduction

Name : Vishrut Mehta

github : https://github.com/VishrutMehta

IRC Handle : vishrut009

Email id: vishrut.mehta009@gmail.com

skype: vishrut009

The Blueprint outlines the development of two functionalities for Sahana Eden. They are:

Full-text Search

- It will let users search for text in uploaded documents.

Global Search

(Do this if time permits)

- It will let users quickly search for a query across multiple resources at once (e.g. Organization, Hospital, etc.).

(Note: For the GSoC project, we will try to build the basic structure of the global search. Extending its functionality and adding a polished UI are out of scope for this project and can be done as post-GSoC work.)

Stakeholders

- The End Users - The end users are a heterogeneous group who would like to run many different kinds of queries.

- Site Administrators, Site Operators

Agencies like:

- TLDRMP : Timor Leste Disaster Risk Management Portal

- CSN : Community Stakeholder Network

- LAC: Los Angeles County

- IFRC : International Federation of Red Cross and Red Crescent Societies

Use Cases and User Stories

For Full-Text Search:

For Unrelated Search

- As a user, I want -

To be able to search the contents of ALL the documents, since as a user I may not know which resource or module to search in.

- As a developer or an admin, I want -

To expand the search functionality, test my search results and improve their efficiency.

For Related Search

- As a user, I want -

To be able to search the contents of the uploaded documents within the relevant resource, as I would be somewhat familiar with the system and would know where to search for what.

- Many agencies would like their search to be efficient. Eden currently has no way to search inside documents, so this project will add that functionality for the users' convenience and for more efficient searching.

- As a developer, I would like to -

To expand the current functionality and increase the efficiency of the search results.

For Global Search:

- The user will type the query into the global search box in the upper right corner of the page. So as a user -

I may want quick, rough results from the records of all resources, rather than going to a particular resource and searching there. This gives the user a rough list of results across the different resources. A single search box that searches globally across the site is a user convenience. As Fran told me, this concept comes from websites rather than applications, but users expect this kind of convenience.

- As an agency,

The current IFRC template design has this functionality, so I will try to accomplish it as part of this project, but it will be a lower priority than the document search.

- The users may want to enter context hints in the global search. For example, if I enter "staff" into the search box, then I want to get a list of staff, or at least a list of links to the pages relevant for "staff". (Beyond the scope of GSoC.)

Use Case Analysis

Target Problem

The Full-Text Search will try to solve two problems:

- To find one particular item (document/record).

- To find all items relevant for a task the user is to perform.

The Global Search will solve the following problem:

- To search for a particular item through all the Resources.

- The use case for this is that the IFRC UX design contains a global search box, so we would add this functionality for agencies of this type.

Benefit to Sahana Eden

- Searching for text inside documents will be implemented as part of this project. Until now no such system exists. So when the user wants to find a particular document/report, a relevant text search should give efficient results.

- For IFRC, the UX design contains a Global Search option in its upper right corner. This will not be an urgent priority (it could be done in the third trimester).

Requirements

Functional

For Full Text Search:

- A proper understanding of S3Filter and how it works is required.

- Literature study of Apache Solr and Lucene (Pylucene is a wrapper around Lucene). Getting familiar with both of them and deploying them on my local machine.

- Studying how the Lucene daemon and the web2py server are linked.

- Extend the functionality of S3Filter by introducing an additional feature (a text field) to search for text in documents.

- A user interface for displaying the search results.

For Global Search

- A good understanding of S3Filter. We would use S3TextFilter after configuring the context links, so that needs to be learned as well.

- Need to search for a particular resource over all the resources.

- An efficient mechanism to search over all the resources.

System Constraints

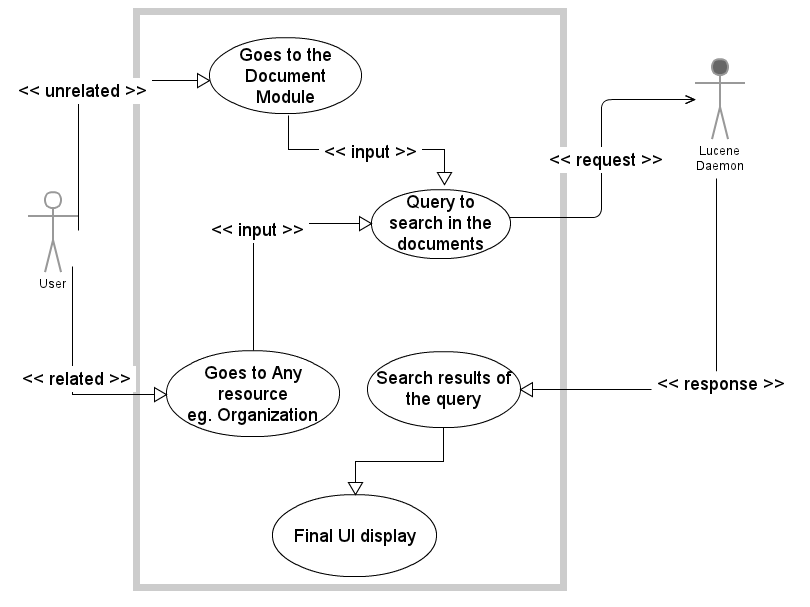

Use-Case Diagram

Design

Workflows

For Full-Text Search:

- The workflow follows the use cases described above. First the user needs to decide whether to search through all the uploaded documents or only the documents of a specific resource.

Unrelated Search:

- The user goes to Document -> Search.

- For Simple Search: Tick the checkbox to search through uploaded documents.

- For Advanced Search: Type the query (string) to search for.

- The output will be the response of the Lucene daemon running in the background.

- The response will be displayed as the search result for that query.

Related Search:

- This type of search is to search for text in uploaded documents over multiple resources.

- Go to that particular resource (e.g. Hospital, Organization, etc.) -> Search.

- Enter the usual search criteria for that resource (normal search) and also the string for the document search in the text filter.

- For Simple Search: Tick the checkbox to search through uploaded documents in related resources.

- For Advanced Search: It will search on the basis of the resource associated with the document and the search string for the document search. (So the search is limited to documents that are associated with that particular resource.)

- The response will be recorded and displayed as output. There will be two categories of output: first the normal output of the search filter form, followed by the results of the full-text query (the display/UI would be the same as for unrelated search).

For Global Search:

- If the user wants to search through all the resources, he goes to the global search option in the upper right corner of the IFRC template.

- The user types the resource or text he wants to search for.

- The back end processes the query, filters the resources and displays the output.

- The main task is how to categorize the output. We will divide the output into categories of resources in alphabetical order, e.g. Assets, CAP, GIS, Hospitals, etc. The category heading would be the resource, and below it would be the records matching the search request.

Site Map

Wireframes

todo

Technologies

The technologies to be used are:

- S3Filter, S3TextFilter, S3Resource and S3ResourceFilter

The documentation for these is here:

http://eden.sahanafoundation.org/wiki/S3/FilterForms

- Apache Solr and Lucene (Pylucene is a wrapper around Lucene)

A comparison between Apache Lucene and Apache Solr is here:

http://www.alliancetek.com/Blog/post/2011/09/22/Solr-Vs-Lucene-e28093-Which-Full-Text-Search-Solution-Should-You-Use.aspx

Solr is a platform that uses the Lucene library. The only time it may be preferable to use Lucene directly is when you want to embed search functionality into your own application. So I chose Lucene for indexing the documents and searching for strings in those documents.

Apache Lucene is a high-performance, full-featured text search engine library written entirely in Java. It is a technology suitable for nearly any application that requires full-text search, especially cross-platform.

Refer to this for more information about its functionality:

http://lucene.apache.org/core/

Permission Management

- The document links are authorized as per the configured system policy, which will remain in place.

- For performance reasons, we should first select the documents by accessible_query, and only then search through them (so the search engine doesn't search through documents which the user is not permitted to access anyway).

- Certain user roles shall only have access to certain types of documents, which is not covered by the Auth mechanism.

- I think the first step is to collect some detailed requirements around document search authorization, and then look into possible solutions.

- Also note that some documents may include Auth rules of their own.
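
The "select by permission first, then search" order from the list above could be sketched as follows. This is only an illustration, not Eden code: `accessible_ids` stands in for the record IDs selected through accessible_query, and the `index` dictionary is a hypothetical in-memory stand-in for the search engine.

```python
def search_permitted(index, accessible_ids, term):
    """Search only the documents the current user may read.

    index          -- dict of document id -> extracted text
                      (hypothetical stand-in for the search engine)
    accessible_ids -- ids the user may read (stand-in for the rows
                      selected through accessible_query)
    term           -- the search string
    """
    term = term.lower()
    permitted = set(accessible_ids)
    # Restrict the candidate set first, so text the user is not
    # permitted to see is never scanned at all
    candidates = ((doc_id, text) for doc_id, text in index.items()
                  if doc_id in permitted)
    return sorted(doc_id for doc_id, text in candidates
                  if term in text.lower())


index = {
    1: "Flood response plan for the northern district",
    2: "Confidential staff list",
    3: "Flood shelter locations",
}
# Document 3 also mentions "flood", but the user cannot access it
print(search_permitted(index, accessible_ids=[1, 2], term="flood"))
```

With a real search engine the same idea would probably be expressed as an ID restriction added to the query, rather than a Python-side filter.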

Planned Implementation

Full-Text Search

- As suggested by Dominic, first we need to send the uploaded documents to the indexer (an external content search engine like Solr/Pylucene) in onaccept.

- We need to make a filter widget for uploaded files, which would be a simple text field for Advanced Search and a checkbox for Simple Search.

- We need to extend the functionality of S3ResourceFilter after extracting all result IDs in S3Resource.select.

- After extracting all the IDs, it would identify all the document content filters, extract the file system paths and run them through the external content search engine (Apache Solr or Lucene (Pylucene is a wrapper around Lucene)), which would in turn return the IDs of the matching items along with the documents.

- Along with the IDs, we also need a snippet of the matching text in the respective document (as in Google Search).

- After running the master query against the record IDs obtained from filtering, we combine the results and show them in the UI.

- We also need a user-friendly UI (taking inspiration from Google or Bing search results).

- The main thing to focus on is the efficiency of the search, as we know it will be computationally challenging to perform an accurate search.

- After the response, what remains is displaying the search results in a proper user-friendly format.

- The UI display for the search record would be something like:

Result:

<file type(in superscript)><The line or title containing the query(string to be searched) text> <Resource>

The path of the file, file name and uploaded date-time

<the snippet of 2-3 lines of that document>

This will be the format of the UI display for each matched record.
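
As a rough sketch of the three-line layout above, a matched record could be rendered like this. The `SearchHit` container and its field names are assumptions made for the illustration; they are not part of Eden or Solr.

```python
from collections import namedtuple

# Hypothetical container for one match; field names are assumptions
SearchHit = namedtuple(
    "SearchHit",
    "filetype title resource path filename uploaded snippet")


def render_hit(hit):
    """Render one match as: header line, file location line, snippet."""
    header = "[%s] %s <%s>" % (hit.filetype, hit.title, hit.resource)
    location = "%s/%s, uploaded %s" % (hit.path.rstrip("/"),
                                       hit.filename,
                                       hit.uploaded)
    return "\n".join((header, location, hit.snippet))


hit = SearchHit(filetype="pdf",
                title="Flood response plan",
                resource="Hospital",
                path="uploads/",
                filename="plan.pdf",
                uploaded="2013-07-01 10:30",
                snippet="...the flood response plan covers...")
print(render_hit(hit))
```

The file type is shown in square brackets here because plain text has no superscript; an HTML view would use a real superscript element instead.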

Future Implementation:

- How to display the search results is a secondary concern. We could take inspiration from Google and Bing search results for an attractive UI format.

Global Search

- At first, we need to index the uploaded documents by defining a new onaccept method for this global search.

- We will look at the design implementation of the IFRC template, and add the global search text box using S3Filter and S3TextFilter.

- We would need to flatten the records and their components into a text representation.

- After doing this, we need to include the important information of the resource as well as the important information of its components in the form of a list.

For example, the list (or a dictionary) would be like "Sectors: Food, Something Else, Another Sector" "Type: Organisation, Name: <name of org>"

- After getting this information, Solr comes into play and does the full-text search on it. We would get the search results through REST calls and then need to output them.

- After doing this and filtering out the common context, we would need a UI to display it. It would be simple, user friendly and categorized. A more polished presentation is left for future implementation.

- The UI presentation will be a categorized display, something like:

Search: DRR

Result:

Sector "DRR":

(Records corresponding to this)

- 5 Projects related to "DRR"

- 7 Programmes related to "DRR"

- 3 Events related to "DRR"

Location "Taiwan DRR Centre":

- 1 Event related to "Taiwan DRR Centre"

- 5 Staff Members related to "Taiwan DRR Centre"

...and so forth.
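
The flattening step and the categorized display described above might look roughly like the sketch below. Both helpers, `flatten` and `categorize`, are hypothetical names introduced for this illustration, and the records are plain dictionaries rather than Eden resources.

```python
from collections import defaultdict


def flatten(record):
    """Flatten a record (a dict whose values may be lists of component
    values) into a single line of text suitable for indexing."""
    parts = []
    for key in sorted(record):
        value = record[key]
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(v) for v in value)
        parts.append("%s: %s" % (key, value))
    return "; ".join(parts)


def categorize(matches):
    """Count matches per context and resource, for a categorized list.

    matches -- iterable of (context, resource) pairs,
               e.g. ('Sector "DRR"', "Projects")
    """
    counts = defaultdict(lambda: defaultdict(int))
    for context, resource in matches:
        counts[context][resource] += 1
    lines = []
    for context in sorted(counts):
        lines.append("%s:" % context)
        for resource in sorted(counts[context]):
            lines.append("- %d %s" % (counts[context][resource], resource))
    return lines


print(flatten({"Type": "Organisation", "Sectors": ["Food", "Health"]}))
print("\n".join(categorize([('Sector "DRR"', "Projects"),
                            ('Sector "DRR"', "Projects"),
                            ('Sector "DRR"', "Events")])))
```

Sorting the keys keeps the flattened text stable between runs, which makes re-indexing the same record idempotent.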

Testing

This will include two types of tests:

1) Selenium tests for correctness of the code and the Eden framework

2) Unit tests for efficiency (this will also cover manual tests to check the efficiency of the search results)

Description of Work Done

- Pylucene

- Installation details are here: http://eden.sahanafoundation.org/wiki/BluePrint/TextSearch#Pylucene

- Apache Solr

- The installation details are here:

- Solr distribution

- Installation

- Tutorial

- Prerequisites:

- Java, at least 1.6

- Unzip the distribution.

- You can, for now, use the setup in the example directory, though this contains sample data that we won't want.

cd example

java -jar start.jar

- Open in browser: http://localhost:8983/solr/

- Sunburnt

- The script attached below installs the dependencies and also configures and installs Apache Solr and Sunburnt.

- Solr Configuration and Schema changes

- I have attached an installation script for installing all the dependencies and the solr configuration.

- You can also install manually.

- These are the dependencies you would need to install:

- Antiword

wget http://www.winfield.demon.nl/linux/antiword-0.37.tar.gz

tar xvzf antiword-0.37.tar.gz

cd antiword-0.37

make

- Pdfminer

wget http://pypi.python.org/packages/source/p/pdfminer/pdfminer-20110515.tar.gz

tar xvzf pdfminer-20110515.tar.gz

cd pdfminer-20110515

python setup.py install

- Pyth

wget http://pypi.python.org/packages/source/p/pyth/pyth-0.5.6.tar.gz

tar xvzf pyth-0.5.6.tar.gz

cd pyth-0.5.6

python setup.py install

- Httplib2

apt-get install python-httplib2

- xlrd

wget http://pypi.python.org/packages/source/x/xlrd/xlrd-0.9.2.tar.gz

tar xvzf xlrd-0.9.2.tar.gz

cd xlrd-0.9.2

python setup.py install

- libxml2, lxml> 3.0

apt-get install python-pip

apt-get install libxml2 libxslt-dev libxml2-dev

pip install lxml==3.0.2

- After installing all these dependencies, we need to change the Solr config file, solr-4.3.x/example/solr/collection1/conf/solrconfig.xml

- Set the directory path where you want to store the indexes. They can be stored anywhere, but it is better to keep them in the eden directory. For example, as in the installation script:

<dataDir>/home/<user>/web2py/applications/eden/indices</dataDir>

- You can change this path to suit your setup; for example, if Solr is on another machine, the directory path would be different.

- Now, we will discuss the schema changes in file solr-4.3.x/example/solr/collection1/conf/schema.xml -

- Add the following in the <fields>..</fields> tag:

    <fields>
    . .
    <field name="tablename" type="text_general" indexed="true" stored="true"/>
    <field name="filetype" type="text_general" indexed="true" stored="true"/>
    <field name="filename" type="text_general" indexed="true" stored="true"/>
    . .
    </fields>

- Then, after the closing </fields> tag, add the following <copyField> entries:

    <copyField source="filetype" dest="text"/>
    <copyField source="tablename" dest="text"/>
    <copyField source="filename" dest="text"/>

- These are the configuration changes and dependencies required to install Solr and Sunburnt and integrate them in Eden.
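
One way to check by script that the schema changes were applied is sketched below. It is not part of the attached installation script; `missing_schema_entries` and the `SAMPLE` schema are made up for the illustration, and a real check would read the contents of schema.xml instead.

```python
import xml.etree.ElementTree as ET

# The three custom fields described in this section
REQUIRED = ("tablename", "filetype", "filename")


def missing_schema_entries(schema_xml):
    """Return (missing <field> names, missing <copyField> sources)."""
    root = ET.fromstring(schema_xml)
    fields = set(f.get("name") for f in root.iter("field"))
    copies = set(c.get("source") for c in root.iter("copyField")
                 if c.get("dest") == "text")
    return ([name for name in REQUIRED if name not in fields],
            [name for name in REQUIRED if name not in copies])


# Deliberately incomplete sample: the copyField for "filename" is missing
SAMPLE = """<schema name="example" version="1.5">
  <fields>
    <field name="tablename" type="text_general" indexed="true" stored="true"/>
    <field name="filetype" type="text_general" indexed="true" stored="true"/>
    <field name="filename" type="text_general" indexed="true" stored="true"/>
  </fields>
  <copyField source="filetype" dest="text"/>
  <copyField source="tablename" dest="text"/>
</schema>"""

missing_fields, missing_copies = missing_schema_entries(SAMPLE)
print("missing fields: %s" % missing_fields)
print("missing copyFields: %s" % missing_copies)
```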

- Enabling text search:

-> Uncomment the following line in models/000_config.py

    # Uncomment this and set the solr url to connect to solr server for Full-Text Search
    settings.base.solr_url = "http://127.0.0.1:8983/solr/"

Specify the appropriate IP address; here it is 127.0.0.1.

If Solr is running on a different machine, specify that machine's IP address instead.
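
To show how the configured URL relates to an actual query, the sketch below builds a request URL for Solr's `select` handler with the standard `q`, `wt` and `rows` parameters. The helper `solr_select_url` is hypothetical, and whether a core name (for example collection1) has to be part of the path depends on the Solr setup.

```python
try:
    from urllib.parse import urlencode   # Python 3
except ImportError:
    from urllib import urlencode         # Python 2


def solr_select_url(base_url, query, core=None, rows=10):
    """Build a select URL from the value of settings.base.solr_url.

    core -- optional core name to insert into the path (assumption:
            only needed when no default core is configured)
    """
    base = base_url.rstrip("/")
    if core:
        base = "%s/%s" % (base, core)
    params = urlencode([("q", query), ("wt", "json"), ("rows", rows)])
    return "%s/select?%s" % (base, params)


print(solr_select_url("http://127.0.0.1:8983/solr/", "flood"))
```

Opening the printed URL in a browser while Solr is running is a quick way to confirm that the configured address is reachable.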

- Asynchronously Indexing and Deleting Documents:

- The code for asynchronously indexing documents is in models/tasks.py

- Insertion: The code first inserts the document into the database. Then, in the onaccept callback, it indexes the document by calling the document_create_index() function from models/tasks.py. The following code should be added to enable Full-Text Search for documents in any module. For an example, see the document_onaccept() hook in modules/s3db/doc.py.

- Deletion: The code first deletes the record from the database table, then deletes the corresponding file from Solr by deleting its index stored on the Solr server. See the document_ondelete() hook in modules/s3db/doc.py.

- In model()

    if settings.get_base_solr_url():
        onaccept = self.document_onaccept  # the onaccept hook that indexes the document
        ondelete = self.document_ondelete  # the ondelete hook that deletes the index
    else:
        onaccept = None
        ondelete = None

    configure(tablename,
              onaccept=onaccept,
              ondelete=ondelete,
              .....

- In onaccept()

    @staticmethod
    def document_onaccept(form):

        vars = form.vars
        doc = vars.file  # where file is the name of the upload field

        table = current.db.doc_document  # doc_document is the tablename
        try:
            name = table.file.retrieve(doc)[0]
        except TypeError:
            name = doc

        document = json.dumps(dict(filename=doc,
                                   name=name,
                                   id=vars.id,
                                   tablename="doc_document",  # the name of the database table
                                   ))

        current.s3task.async("document_create_index",
                             args = [document])

        return

- In ondelete():

    @staticmethod
    def document_ondelete(row):

        db = current.db
        table = db.doc_document  # doc_document is the tablename

        record = db(table.id == row.id).select(table.file,  # where file is the name of the upload field
                                               limitby=(0, 1)).first()

        document = json.dumps(dict(filename=record.file,  # where file is the name of the upload field
                                   id=row.id,
                                   ))

        current.s3task.async("document_delete_index",
                             args = [document])

        return
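
The hooks above pass the document details to the task as a JSON string. The sketch below shows how the receiving side might decode that string and pick a text extractor by file extension. It is not the code from models/tasks.py: `parse_index_request` is a hypothetical helper, and the extension-to-tool mapping is an assumption based on the dependencies listed earlier.

```python
import json
import os

# Assumed mapping from file extension to the extraction tool listed
# under the dependencies; not taken from the Eden source
EXTRACTORS = {
    ".doc": "antiword",
    ".pdf": "pdfminer",
    ".rtf": "pyth",
    ".xls": "xlrd",
}


def parse_index_request(payload):
    """Decode the JSON string built in the onaccept hook and decide
    which extractor would handle the file (hypothetical helper)."""
    document = json.loads(payload)
    extension = os.path.splitext(document["name"])[1].lower()
    document["filetype"] = extension.lstrip(".")
    # None means the file type is not supported for indexing
    document["extractor"] = EXTRACTORS.get(extension)
    return document


# The same keys the onaccept hook puts into the JSON string
payload = json.dumps(dict(filename="doc_document.file.abc123.pdf",
                          name="Report.PDF",
                          id=7,
                          tablename="doc_document"))
request = parse_index_request(payload)
print("%s -> %s" % (request["name"], request["extractor"]))
```

Passing a JSON string rather than Python objects keeps the task arguments serializable, which is presumably why the hooks call json.dumps() before scheduling the task.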

Full Text Functionality

- The full-text search functionality is integrated in modules/s3/s3resource.py; the fulltext() function does the work.

- The flow is: first the TEXT query goes to the transform() function, which splits the query recursively and then transforms it.

- After transforming, the query with the TEXT operator goes to the fulltext() function, which searches for the keywords in the indexed documents.

- It retrieves the document IDs and then converts them into a BELONGS S3ResourceQuery.

- The code for fulltext() is in modules/s3/s3resource.py
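
The idea of turning a TEXT condition into a BELONGS condition can be illustrated without any Eden classes. In the sketch below a plain tuple stands in for the S3ResourceQuery, and `fake_search` is a made-up stand-in for the Solr lookup; the real fulltext() differs in its details.

```python
def fulltext_to_belongs(term, search, field="id"):
    """Replace a TEXT condition by a BELONGS condition over record ids.

    search -- callable returning the ids of the indexed documents that
              match term, or None if the index cannot be reached
    """
    ids = search(term)
    if ids is None:
        # Index unavailable: leave it to the caller to decide what to do
        return None
    return (field, "belongs", sorted(set(ids)))


def fake_search(term):
    """Stand-in for the search engine: substring match on a tiny index."""
    index = {3: "flood shelter", 5: "flood plan", 9: "staff list"}
    return [doc_id for doc_id, text in index.items()
            if term.lower() in text]


print(fulltext_to_belongs("flood", fake_search))
```

Because the result is an ordinary ID filter, it can be combined with the other filters of the resource in the usual way.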

Unit Test

- Unit tests are an important part of any code, to check whether it works as expected.

- For Full-Text Search, I have implemented them in modules/unit_tests/s3/s3resource.py - Class DocumentFullTextSearchTests

- To run the unit test, type in your web2py folder:

python web2py.py -S eden -M -R applications/eden/modules/unit_tests/s3/s3resource.py

- To check the code in different situations and remove errors, different tests are implemented. The cases are:

- When Solr is available (Solr server is running normally)

- When Solr is unavailable (Solr server is not running, but enabled)

- Checking the output of query() function

- Checking the output of call() function
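
The first two cases (Solr available and Solr unavailable) follow a common pattern that can be sketched with the standard unittest module. This is not the DocumentFullTextSearchTests class itself: `safe_search` and the stub search functions are hypothetical and only illustrate the pattern.

```python
import unittest


def safe_search(search, term):
    """Run search(term); return None instead of raising when the
    search server cannot be reached."""
    try:
        return search(term)
    except IOError:
        return None


class FullTextSearchSketchTests(unittest.TestCase):

    def test_solr_available(self):
        # Stub that behaves like a running server returning two ids
        self.assertEqual(safe_search(lambda term: [1, 2], "flood"), [1, 2])

    def test_solr_unavailable(self):
        # Stub that behaves like a server that is enabled but not running
        def unreachable(term):
            raise IOError("connection refused")
        self.assertIsNone(safe_search(unreachable, "flood"))
```

Saved as a file, these tests can be run with python -m unittest; stubbing the search function means neither test needs a real Solr server.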

References

Mailing List Discussion

https://groups.google.com/forum/?fromgroups=#!topic/sahana-eden/bopw1fX-uW0

Chats and Discussions

http://logs.sahanafoundation.org/sahana-eden/2013-03-24.txt

http://logs.sahanafoundation.org/sahana-eden/2013-04-20.txt

Online Resources

http://lucene.apache.org/core/4_2_1/queryparser/org/apache/lucene/queryparser/classic/package-summary.html#Boosting_a_Term

http://lucene.apache.org/pylucene/features.html

http://oak.cs.ucla.edu/cs144/projects/lucene/

Pylucene

Installation on Debian/Ubuntu

- Prerequisites:

Java JDK (OpenJDK or the Oracle/Sun version)

Apache Ant

JCC

- For Java JDK:

Edit /etc/apt/sources.list, add the following line:

deb http://ftp2.de.debian.org/debian squeeze main non-free

Update

$ sudo apt-get update

Check for available Sun Java Packages:

$ sudo apt-cache search sun-java6

Output:

sun-java6-bin - Sun Java(TM) Runtime Environment (JRE) 6 (architecture dependent files)

sun-java6-demo - Sun Java(TM) Development Kit (JDK) 6 demos and examples

sun-java6-doc - Sun JDK(TM) Documention -- integration installer

sun-java6-fonts - Lucida TrueType fonts (from the Sun JRE)

sun-java6-javadb - Java(TM) DB, Sun Microsystems' distribution of Apache Derby

sun-java6-jdk - Sun Java(TM) Development Kit (JDK) 6

sun-java6-jre - Sun Java(TM) Runtime Environment (JRE) 6 (architecture independent files)

sun-java6-plugin - The Java(TM) Plug-in, Java SE 6

sun-java6-source - Sun Java(TM) Development Kit (JDK) 6 source files

Now Install:

$ sudo apt-get install sun-java6-bin sun-java6-javadb sun-java6-jdk sun-java6-plugin

In order to accept the License Agreement, navigate to the Accept field with TAB.

Make Sun Java the Java runtime of your choice:

$ update-java-alternatives -s java-6-sun

Check that Java was installed properly:

$ java -version

java version "1.6.0_22"

Java(TM) SE Runtime Environment (build 1.6.0_22-b04)

Java HotSpot(TM) 64-Bit Server VM (build 17.1-b03, mixed mode)

Set Environment Variable $JAVA_HOME

Edit file /etc/profile, add:

JAVA_HOME="/usr/lib/jvm/java-6-sun"

export JAVA_HOME

DONE :)

- Apache Ant

-> Download the binary distribution from: http://ant.apache.org/bindownload.cgi

Uncompress the downloaded file into a directory.

Set ANT_HOME to the directory you uncompressed Ant to.

Add ${ANT_HOME}/bin (Unix) to your PATH by:

$ export PATH=${PATH}:${ANT_HOME}/bin

From the ANT_HOME directory run:

$ ant -f fetch.xml -Ddest=system

to get the library dependencies of most of the Ant tasks that require them.

After successfully installing these pre-requisites, now:

$ cd ./pylucene-x.x.x/

$ cd jcc

$ <edit setup.py to match your environment>

Open setup.py, and search for “JDK”. Update the JDK path to what you found in step 3.

    JDK = {
        'darwin': JAVAHOME,
        'ipod': '/usr/include/gcc',
        #'linux2': '/usr/lib/jvm/java-6-kopenjd',
        'linux2': '/usr/lib/jvm/java-6-sun',
        'sunos5': '/usr/jdk/instances/jdk1.6.0',
        'win32': JAVAHOME,
        'mingw32': JAVAHOME,
        'freebsd7': '/usr/local/diablo-jdk1.6.0'
    }

Run:

$ python setup.py build

$ sudo python setup.py install

$ cd ..

<edit Makefile to match your environment>

$ vim Makefile

Uncomment the following:

    # Linux (Ubuntu 11.10 64-bit, Python 2.7.2, OpenJDK 1.7, setuptools 0.6.16)
    # Be sure to also set JDK['linux2'] in jcc's setup.py to the JAVA_HOME value
    # used below for ANT (and rebuild jcc after changing it).
    PREFIX_PYTHON=/usr
    ANT=JAVA_HOME=/usr/lib/jvm/java-6-sun /home/user/apache-ant-1.9.1/bin/ant
    PYTHON=$(PREFIX_PYTHON)/bin/python
    JCC=$(PYTHON) -m jcc --shared --arch x86_64
    NUM_FILES=200

Then adjust the values to match your environment, using the code above as a guide.

Now, run:

$ make

This 'make' is very slow. On my dual-core laptop it took about 15 minutes to complete.

$ sudo make install

$ make test (look for failures)

Successfully Installed PyLucene, Hurray!! :D

Links: http://ant.apache.org/manual/install.html
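
Besides `make test`, a quick check from Python can confirm that the module is importable. The sketch below only imports the `lucene` module and reads its VERSION attribute; the helper name `pylucene_version` is made up for this example.

```python
def pylucene_version():
    """Return the PyLucene version string, or None if the lucene
    module cannot be imported."""
    try:
        import lucene
    except ImportError:
        return None
    # Fall back to a placeholder if the attribute is not present
    return getattr(lucene, "VERSION", "unknown")


version = pylucene_version()
if version:
    print("PyLucene %s is importable" % version)
else:
    print("PyLucene is not importable")
```

If the import fails after a successful `make install`, the usual suspects are a different Python interpreter than the one used for the build, or a JAVA_HOME that does not match the one JCC was built with.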

Apache Solr

Prerequisites:

- Java, at least 1.6

Not sure this is needed:

- GCC compiler:

sudo apt-get install gcc g++

Briefly:

- Unzip the distribution.

- You can, for now, use the setup in the example directory, though this contains sample data that we won't want.

cd example

java -jar start.jar

- Open in browser: http://localhost:8983/solr/

Eden configuration changes:
